本文为清华大学”模式识别与机器学习”课程的复习笔记($\text{Full Version}$)。

Evaluation Metric

k-NN

Nearest Neighbor

For a new instance $x’$, its class $\omega’$ can be predicted by:

k-Nearest Neighbor

For a new instance $x$, define $g_i(x)$ as: the number of $x$’s k-nearest instances belonging to the class $\omega_i$.

Then the new instance’s class $\omega’$ can be predicted as:

k-NN Improvements

Branch-Bound Algorithm

Use tree structure to reduce calculation.

Edit Nearest Neighbor

Delete nodes that may be misguiding from the training instance set.

Condensed Nearest Neighbor

Delete nodes that are far away from decision boundaries.

The Curse of Dimensionality

Problem

- Many irrelevant attributes

- In high-dimensional spaces, most points are equally far from each other.

Solution

- Dimensionality reduction techniques

- manifold learning

- Feature selection

- Use prior knowledge

Linear Regression (Multivariate ver.)

For a multivariate linear regression, the function becomes $y_i = \mathbf{w}^{\rm T}\mathbf{x}_i$ , where:

, We adjust the values of $\mathbf{w}$ to find the equation that gives the best fitting line $f(x) = \mathbf{w}^{\rm T}\mathbf{x}$

We find the best $\mathbf{w}^*$ using the Mean Squared Loss:

So that $\mathbf{w}^{\star} $ must satisfy $\mathbf {X^{\rm T}} \mathbf {Xw^{\star}} = \mathbf X^{\rm T}\mathbf y$ , so we get $\mathbf{w^{\star}} = (\mathbf {X^{\rm T}X})^{-1}\mathbf X^{\rm T}\mathbf y$ or $\mathbf{w^{\star}} = (\mathbf {X^{\rm T}X} + \lambda \mathbf I)^{-1}\mathbf X^{\rm T}\mathbf y$ (Ridge Regression)

Linear Discriminant Analysis

project input vector $\mathbf x \in \mathbb{R}^{d+1}$ down to a 1-dimensional subspace with projection vector $\mathbf w$

The problem is how do we find the good projection vector? We have Fisher’s Criterion, that is to maximize a function that represents the difference between-class means, which is normalized by a measure of the within-class scatter.

We have between-class scatter $\tilde{S}_b = (\tilde{m}_1 - \tilde{m}_2)^2$, where $\tilde{m}_i$ is the mean for the i-th class. Also we have within-class scatter $\tilde{S}_i=\sum_{y_j \in \mathscr{y}_{i}} (y_j - \tilde{m}_i)^2$, then we have total within-class scatter $\tilde{S}_w = \tilde{S}_1+ \tilde{S}_2$. Combining the 2 expressions, the new objective function will be $J_F(\mathbf w) = \frac{\tilde{S}_b}{\tilde{S}_w}$

We have $\tilde{S}_b = (\tilde{m}_1 - \tilde{m}_2)^2 = (\mathbf w^{\rm T} \mathbf m_1 - \mathbf w^{\rm T} \mathbf m_2)^2 = \mathbf w^{\rm T} (\mathbf m_1 - \mathbf m_2)(\mathbf m_1 - \mathbf m_2)^{\rm T} \mathbf w = \mathbf w^{\rm T} \mathbf S_b \mathbf w$, also $\tilde{S}_w = \mathbf w^{\rm T} \mathbf S_w \mathbf w$, so now optimize objective function $J_F$ w.r.t $\mathbf w$:

Use Lagrange Multiplier Method we obtain: $\lambda w^{\star} = \mathbf{S}_W^{-1} (\mathbf m_1 - \mathbf m_2)(\mathbf m_1 - \mathbf m_2)^{\rm T}\mathbf w^{\star}$, since we only care about the direction of $\mathbf w^*$ and $(\mathbf m_1 - \mathbf m_2)^{\rm T}\mathbf w^{\star}$ is scalar, thus we obtain $w^{\star} = \mathbf{S}_W^{-1} (\mathbf m_1 - \mathbf m_2)$

Logistic Regression

Logistic regression is a statistical method used for binary classification, which means it is used to predict the probability of one of two possible outcomes. Unlike linear regression, which predicts a continuous output, logistic regression predicts a discrete outcome (0 or 1, yes or no, true or false, etc.).

Key Concepts

Odds and Log-Odds:

- Odds: The odds of an event are the ratio of the probability that the event will occur to the probability that it will not occur.

- Log-Odds (Logit): The natural logarithm of the odds.

Logistic Function (Sigmoid Function):

- The logistic function maps any real-valued number into the range (0, 1), making it suitable for probability predictions.

- In logistic regression, $ z $ is a linear combination of the input features.

Model Equation:

- The probability of the positive class (e.g., $ y=1 $) is given by the logistic function applied to the linear combination of the features.

- The probability of the negative class (e.g., $ y=0 $) is:

Decision Boundary:

- To make a binary decision, we typically use a threshold (commonly 0.5). If $ P(y=1|x) $ is greater than 0.5, we predict the positive class; otherwise, we predict the negative class.

Training the Model

We use MLE(Maximum Likelihood Estimation) for logistic regression:

Applying negative log to the likelihood function, we obtain the log-likelihood for logistic regression. =

Substituting $y_i \in \{0, +1\}$ with $\tilde y_i \in \{-1, +1\}$, and noting that $\theta(-s) + \theta(s) = 1$, we can simplify the previous expression:

This is called the Cross Entropy Loss.

Generalization to K-classes

The generalized version of logistic regression is called Softmax Regression.

The probability of an input $x$ being class $k$ is denoted as:

In multiclass, the likelihood function can be written as:

We can use the minimum negative log-likehood estimation:

Perceptron

We predict based on the sign of $y$: $y = \text{sign}(f_{\mathbf w}(x)) = \text{sign}(\mathbf w^{\rm T}\mathbf x)$

For Perceptron the objective loss function is defined as:

where $\mathcal{X}^k$ is the misclassified sample set at step $k$.

We can use gradient descent to solve for $\mathbf w^*$:

Support Vector Machine

We want the optimal linear separators, that is the most robust classifier to the noisy data, meaning it has the largest margin to the training data. So we want to find the classifier with the largest margin.

Modeling(For Linear-Separable Problem)

We want the margin is largest: $\max\limits_{\mathbf w, b}\rho(\mathbf w, b)$, and all the datapoints are classified correctly, that is $y_i \cdot (\mathbf w^{\rm T}\mathbf x_i + b) \geq 1$.

The distance between two paralleled hyperplanes is: $|b_1 - b_2| / ||a||$, and the distance between a point $\mathbf x_0$ and a hyperplane $(\mathbf w, b)$ is $|\mathbf w^{\rm T} \mathbf x_0 + b| / ||\mathbf w||$.

Choose the points that are closest to the classifier, and they satisify: $|\mathbf w^{\rm T} \mathbf x_0 + b| = 1$, so that margin $\rho$ = $|\mathbf w^{\rm T} \mathbf x_1 + b| / ||\mathbf w|| + |\mathbf w^{\rm T} \mathbf x_2 + b| / ||\mathbf w|| = 2 / ||\mathbf w||$.

Thus we got the Hard-margin Support Vector Machine:

s.t. $y_i \cdot (\mathbf w^{\rm T}\mathbf x_i + b) \geq 1, 1 \leq i \leq n$

For compute convenience, we convert it into

s.t. $y_i \cdot (\mathbf w^{\rm T}\mathbf x_i + b) \geq 1, 1 \leq i \leq n$

Modeling(For Linearly Non-Separable Problem)

We add a slack that allows points to be classified on the wrong side of the decision boundary, also we add a penalty. So we got the Soft-margin SVM:

s.t. $y_i \cdot (\mathbf w^{\rm T}\mathbf x_i + b) \geq 1 - \xi_i, 1 \leq i \leq n$

Using hinge-loss $\ell_{\text{hinge}}(t) = \max(1-t, 0)$, we have the final version of Soft-margin SVM:

Optimization For Training

Lagrangian Function & KKT Condition

Consider a constrained optimization problem

The Lagrangian function $L(x, \mu)$ is defined as:

We have KKT conditions(necessary condition): for $1 \leq j \leq J$

- Primal feasibility: $g_j(x) \leq 0$

- dual feasibility: $\mu_i \geq 0$

- Complementary slackness: $\mu_i g_j(x^*) = 0$

- Lagrangian optimality: $\nabla_x L(x_*, \mu) = 0$

Dual Problem For Soft-margin SVM

For Soft-margin Support Vector Machine:

s.t. $y_i \cdot (\mathbf w^{\rm T}\mathbf x_i + b) \geq 1 - \xi_i, \xi_i \geq 0, 1 \leq i \leq n$

We have the Lagrangian function(with $2n$ inequality constraints):

s.t. $\alpha_i \geq 0, \mu_i \geq 0, \, i = 1, \ldots, n$.

take the partial derivatives of Lagrangian w.r.t $\mathbf w, b, \xi_i$ and set to zero

So that we got:

So we have the Dual Problem of Soft-SVM:

s.t. $\sum_{i=1}^{n} \alpha_i y_i = 0, \quad 0 \leq \alpha_i \leq C, \, i = 1, \ldots, n.$

After solving $\alpha$, we can get $\mathbf{w} = \sum_{j=1}^n\alpha_j y_j x_j$ and $b$

Kernel Method for SVM

Linear SVM cannot handle linear non-separable data. So we need to map the original feature space to a higher-dimensional feature space where the training set is separable.

Basically we could set $x \to \phi(x)$, but calculating $x_i \dots x_j$ will cause heavy computation cost, so we use the kernel trick, that is to find a function $k(x_i, x_j) = \phi(x_i) \dots \phi(x_j)$.

Some commonly used kernel:

Linear Kernel:

Polynomial Kernel:

Radial Basis Function Kernel (a.k.a. RBF kernel, Gaussian kernel):

Sigmoid Kernel:

Kernel tricks can also be applied to more algorithms, such as k-NN, LDA, etc.

Decision Tree

We use a tree-like structure to deal with categorical features.

For each node, we find the most useful feature, that means the feature that can better divide the data on the node.

ID3 Algorithm

We use entropy as criterion:

A good split gives minimal weighted average entropy of child nodes:

For any split, the entropy of the parent node is constant. Minimizing the weighted

entropy of son nodes is equivalent to maximizing the information gain (IG):

C4.5 Algorithm

Information Gain is highly biased to multivalued features. So we use Information Gain Ratio (GR) to choose optimal feature:

Intrinsic Value (IV) is to punish multivalued features. For a selected feature $f$, its Intrinsic Value is:

where $V$ is the set of all possible values of the feature $f$, and $F_k$ is the subset of $D$ where the value of the feature $A$ is $k$. Features with many possible values tend to have a large Intrinsic Value.

Classification and Regression Tree(CART)

The CART Tree muse be a binary tree.

Regression Tree

How to divide the regions $R = \{R_1, \dots, R_m\}$ and decide the values $V = \{v_1, \dots, v_m\}$?

We use minimum mean-square error over all examples $x_i$ with label $y_i$

Assuming that R has been determined and first find the optimal V. For a given region R_j, the value $v_j$ to minimize the loss is the average value of the labels of all samples belonging to region $R_j$:

Now for each feature $A$ and split threshold $a$, the parent node $R$ is split by $(A, a)$ to $R_1$ and $R_2$. We choose $(A, a) over all possible values to minimize:

where $v_1(A, a)$ and $v_2(A, a)$ are described above.

Classification Tree

The split criteria is now Gini Index:

We choose the feature $A$ and the threshold $a$ over all possible values with the

maximal gain

Ensemble Learning

Reduce the randomness (variance) by combining multiple learners.

Bagging(Bootstrap Aggregating)

- Create $M$ bootstrap datasets

- Train a learner on each dataset

- Ensemble $M$ learners

Uniformly sample from the original data D with replacement. The bootstrap dataset

has the same size as the original data D, the probability of not showing up is

We use the elements show up in $D$ but not in the bootstrap dataset as the validation set(The out-of-bag dataset).

Random Forest

Ensemble decision trees (Training data with $d$ features)

- Create bootstrap datasets

- During tree construction, randomly sample $K (K<d)$ features as candidates for each split. (Usually choose $K = \sqrt d$)

Use feature selection to make treees mutally independent and diverse.

Boosting

Boosting: Sequentially train learners. Current Weak learners focus more on the

examples that previous weak learners misclassified.

Weak classifiers $h_1, \cdots, h_m$ are build sequentially. $h_m$ outputs ‘$+1$’ for one

class and ‘$-1$’ for another class.

Classify by $g(x) = \text{sgn}(\sum \alpha_m h_m(x))$

AdaBoost

Core idea: give higher weights to the misclassified examples so that half of the

training samples come from incorrect classifications. (re-weighting)

Mathematical Formulation:

Weighted Error:

Alpha Calculation:

Weight Update:

Final Hypothesis:

Gradient Boosting

View boosting as an optimization problem. The criterion is to minimize the empirical loss:

Loss function $l$ depends on the task:

- Cross entropy for multi-classification

- $\text{L2}$ loss for regression

We use sequential training: optimize a single model at a time, that is freeze $h_1, \cdots, h_{t-1}$ and optimize $h_t$. (Let $f_{t-1}(x) = \sum_{s=1}^{t-1} \alpha_s h_s(x)$, denoting the ensemble of $t-1$ learners.)

Now let’s see how to choose the $\alpha_t$ and $h_t$, we define:

Consider function $F(u) = \sum_{i=1}^n l(y_i, u_i)$, then the original objective is equivalent to find a direction $\Delta u$ and step size $\alpha$ at the point $u$

to minimize:

According to Gradient Descent, we could let $\delta u = \nabla_u F(u)$, thus

Then how to decide $\alpha_t$? Use one-dimensional search $(y_i, x_i, f_{t-1}, h_t \text{ is fixed})$

For simplicity, search of optimal multiplier can be replaced by setting it a constant.

In conclusion, Gradient Boosting = Gradient Descent + Boosting.

Learning Theory

Empirical Risk Minimization (ERM)

Empirical Risk: The average loss of the model $f$ on training set $\mathcal D = \{x_i, y_i\}^N_{i=1}$

Empirical Risk Minimization(ERM): The learning algorithm selects the model that minimizes the empirical risk on the training dataset.

The Consistency of Learning Process

We say a learning process is consistent, if the minimizer for empirical risk at

the infinite data limit, converges to the minimum expected risk.

Overfitting and Bias-Variance Trade-off

Define the Population Loss (also called Expected Risk) as

Therefore define the Generalization Gap as: $R(f) - \hat R(f)$

There are two important concepts of predicting model

- Bias: The assumptions of target model, represents the extent to which the

average prediction over all datasets differs from the desired function. - Variance: The extent of change for the model when the training data changes

(can be understood as “stability” to dataset change).

Bias-Variance Trade-off : There is an intrinsic contradict between bias and variance. The model’s test error contains the sum of both.

Bias-Variance Decomposition :

Suppose the ground truth function is $f^*$, the data distribution is $\mu$, the algorithm $\mathcal{A}$ learns from hypothesis space $\mathcal{H}$. We use $y(x; \mathcal{D}) = \mathcal{A}(\mathcal{D}, \mathcal{H})(x)$ to denote the output of ERM model $\hat{f} = \mathcal{A}(\mathcal{D}, \mathcal{H})$ on input $x$.

We are interested in the learned model’s prediction error on any $x$, namely

Taking expectation over all possible datasets $\mathcal{D}$, the last term is zero.

Regularization refers to techniques that are used to calibrate machine learning

models in order to prevent overfitting, which picks a small subset of solutions

that are more regular (punish the parameters for behaving abnormally) to

reduce the variance.

Generalization Error and Regularization

VC dimension

VC dimension is a measure of complexity for a certain hypothesis class:

The largest integer $d$ for a binary classification hypothesis class $\mathcal H$, such that

there exists 𝑑 points in the input space 𝒳 that can be perfectly classified by some

function $h \in \mathcal H$ no matter how you assign labels for these $d$ points.

VC dimension characterizes the model class’s capacity for fitting random labels.

Generalization Error Bound

If a hypothesis class $\mathcal{H}$ has VC dimension $d_{vc}$, we have a theorem that states that with probability $1 - \delta$ and $m$ samples, we can bound the generalization gap for any model $h \in \mathcal{H}$ as

Bayesian Decision

Bayesian Decision: Find an optimal classifier according to the prior probability and class-conditional probability density of the feature

The a priori or prior probability reflects our knowledge of how likely we expect a certain state of nature before we can actually observe said state of nature.

The class-conditional probability density function is the probability

density function $P(x|\omega)$ for our feature $x$, given that the state/class is $\omega$

Posterior Probability is the probability of a certain state/class given

our observable feature $x$: $P(\omega | x)$

Minimum Prediction Error Principle. The optimal classifier $f(\cdot)$ should minimize the expected prediction error, defined as

So, for each $x$, we want

Therefore, the classifier just needs to pick the class with largest posterior probability.

We could use a decision threshold $\theta$ for diciding. Also we can avoid making decisions on the difficult cases in anticipation of a high error rate on those examples.

Density estimation

We need a method to estimate the distribution of each feature, this is called density estimation.

Parametric Density Estimation Method

We can assume that the density function follows some form, for example:

The unknown $\theta_i = (\mu_i, \sigma_i)$ is called the parameters.

Maximum Likelihood Estimation (MLE)

Likelihood Function: $p(x|\theta)$ measures the likelihood of a parametrized distribution to generate a sample $x$.

Max Likelihood Estimation (MLE): Choose the parameter 𝜃 that maximizes the

likelihood function for all the samples.

For example, if we use Gaussian to estimate $X = \{x_i\}_{i=1}^N$, MLE gives the result as

For the sake of simplicity, denote $H(\theta) = \ln p(X|\theta) = \sum_{i=1}^{N} \ln p(x_i|\theta)$

Non-parametric Density Estimation Method

Non-parametric method makes few assumptions about the form of the distribution and does not involve any parameter about the density function’s form.

Suppose totally we sample $N$ data, of which $K$ points are within $R$. Each data is

sample identically and independently. For each sample, whether it belongs to 𝑅 follows Bernoulli distribution with parameter $P_R$. We have $p(x) \approx \frac{P_R}{V} \approx \frac{K}{NV}$

We could apply kernel methods to it.

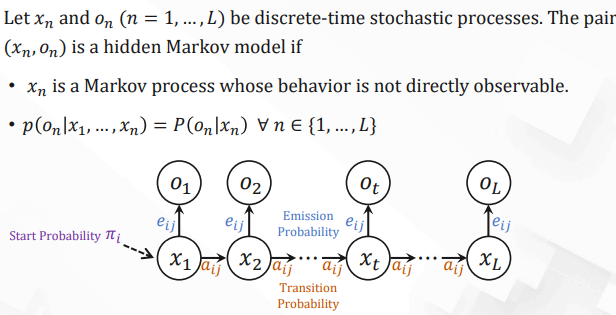

Hidden Markov Models (HMMs)

Understanding Bayes’ Rule:

- Prior $P(H)$ : How probable was our hypothesis before observing the evidence?

- Likelihood $p(E|H)$ : How probable is the evidence given that our hypothesis is true?

- Marginal $P(E)$: How probable is the new evidence?

| Notation | Explanation |

|---|---|

| $Q = \{q_1, \ldots, q_n\}$ | The set of $n$ hidden states. |

| $V = \{v_1, \ldots, v_v\}$ | The set of all possible observed values. |

| $A = [a_{ij}]_{n \times n}$ | Transition matrix. $a_{ij}$ is the probability of transitioning from state $i$ to state $j$. $\sum_{j=1}^n a_{ij} = 1 \, \forall i$. |

| $O = o_1 o_2 \cdots o_L$ | Observed sequence. $o_t \in V$. |

| $x = x_1 x_2 \cdots x_L$ | Hidden state sequence. $x_t \in Q$. |

| $E = [e_{ij}]_{n \times v}$ | Emission probability matrix. $e_{ij} = P(o = v_j \mid x = q_i)$ is the probability of observing $v_j$ at state $q_i$. $\sum_{j=1}^V e_{ij} = 1 \, \forall i$. |

| $\pi = [\pi_1, \pi_2, \ldots, \pi_n]$ | Start probability distribution. $\pi_i$ is the probability of Markov chain starting from $i$. $\sum_{i=1}^n \pi_i = 1$. |

Question #1 – Evaluation

The evaluation problem in HMM: Given a model $M$ and an observed sequence $O$, calculate the probability of the observed sequence $P(O|M)$ .

Forward Algorithm

Denote $\alpha_t(j)$ as the probability of observing $o_1 o_2 \ldots o_t$ and the hidden state at $t$ being $q_j$:

Obviously, $\alpha_t(j)$ can be rewritten as:

Define Initial Values:

Iterative solving:

Obtaining results:

Backward Algorithm

Denote $\beta_t(j)$ as the probability of observing $o_{t+1} o_{t+2} \ldots o_L$ and the hidden state at $t$ being $q_j$:

Obviously, $\beta_t(j)$ can be rewritten as:

Define Initial Values:

Iterative solving:

Obtaining results:

Question #2 – Decoding

The decoding problem in HMM: Given a model $M$ and an observed sequence $O$, calculate the most probable hidden state sequence $\mathbf{x} = \arg\max_{\mathbf{x}} p(\mathbf{x}, O | M)$.

Define:

According to the recurrence relation, rewrite the above as:

Therefore, the most probable hidden state sequence is:

Viterbi Algorithm

Define Initial Values:

Iterative solving:

Obtaining results:

Computational Complexity: $O(n^2 L)$

Question #3 – Learning

The learning problem in HMM: Given an observed sequence $O$, estimate the parameters of model: $M = \arg \max \limits_{M}P(M|O)$

For simplicity, in the following steps we only present the learning process of transition matrix $A$. (The other parameters can be learned in a similar manner.)

Baum-Welch Algorithm (a special case of EM algorithm)

- Expectation Step (E-step): Using the observed available data of the dataset, we estimate (guess) the values of the missing data with the current parameters $\theta_{\text{old}}$.

- Maximization Step (M-step): Using complete data generated after the E-step, we update the parameters of the model.

E-step

(#$T_{ij}$ denotes the times of hidden state transitioning from $q_i$ to $q_j$)

Generate the guesses of #$T_{ij}$, i.e., the expected counts:

Can be estimated with Forward Algorithm and Backward Algorithm.

M-step

Generate new estimations with the expected counts:

Estimation when hidden state is unknown.

Iterative Solving: Recalculate the expected counts with newly estimated parameters (E-step). Then generate newer estimations of $\theta$ with (M-step). Repeat until convergence.

Bayesian Networks

Naive Bayes

Naïve Bayes Assumption: Features $X_i$ are independent given class $Y$:

Inference: the label can be easily predicted with Bayes’ rule

$Y^*$ is the value that maximizes Likelihood $\times$ Prior.

When the number of samples is small, it is likely to encounter cases where $\text{Count}(Y = y) = 0$ or $\text{Count}(X_i = x, Y = y) = 0$. So we use Laplace Smoothing. The parameters of Naïve Bayes can be learned by counting:

- Prior:

- Observation Distribution

Here, $C$ is the number of classes, $S$ is the number of possible values that $X_i$ can take.

Learning & Decision on BN

Bayesian Network

BN$(G, \Theta)$: a Bayesian network

- $G$ is a DAG with nodes and directed edges.

- Each node represents a random variable. Each edge represents a causal relationship/dependency.

- $\Theta$ is the network parameters that constitute conditional probabilities.

- For a node $t$, its parameters are represented as $p(x_t \mid x_{\text{pa}(t)})$.

Joint probability of BN:

where $\text{pa}(t)$ is the set of all parent nodes of node $t$.

Learning on Bayesian Network

Notation: Suppose BN has $n$ nodes, we use $\text{pa}(t)$ to denote the parent nodes of $t$ $(t = 1, \ldots, n)$

By the conditional independence of BN, we have

Thus, the posterior becomes:

Learning BN with Categorical Distribution

Consider a case where each probability distribution in BN is categorical, In this case, we can model the conditional distribution of node $t$ as(We use a scalar value $c$ to represent parent nodes’ states for simplicity.):

and the conditional probability of node $t$ can be denoted as:

Categorical Distribution:

E.g., toss a coin $(d = 2)$, roll a die $(d = 6)$

Count the training samples where $x_t = k, x_{\text{pa}(t)} = c$:

According to the property of categorical distribution, we can represent the likelihood function as:

Thus the posterior can be further factorized:

Notation:

- $D_{tc}$ are the sample set where the value of $x_{\text{pa}(t)}$ is $c$

- $q_t$ is the number of possible values of $x_{\text{pa}(t)}$

- $K_t$ is the number of possible values of $x_t$

How to choose the probability distribution function for the prior $p(\theta_{tc})$? It would be highly convenient if the posterior shares the same form as the prior.

Conjugate Prior: A prior distribution is called a conjugate prior for a likelihood function if the posterior distribution is in the same probability distribution family as the prior.

The conjugate prior for the categorical distribution is the Dirichlet distribution:

Choosing the prior as conjugate prior — Dirichlet distribution:

$\alpha_{tck}$ are integers and are the hyperparameters of BN model.

In this case, the posterior can be easily derived as:

We can then derive an estimate of $\theta_{tck}$ by calculating the expectation:

K-Means Algorithm

- Initalize cluster centers $\mu_1, \cdots, \mu_k$ randomly.

Repeat until no change of cluster assignment

- Assignment step: Assign data points to closest cluster center

- Update Step: Change the cluster center to the average of its assigned points

Optimization View of K-Means

Optimization Objective: within-cluster sum of squares (WCSS)

Step 1: Fix $\mu$, optimize $r$

Step 2: Fix $r$, optimize $\mu$

Rule of Thumbs for initializing k-means

- Random Initialization: Randomly generate 𝑘 points in the space.

- Random Partition Initialization: Randomly group the data into 𝑘 clusters and

use their cluster center to initialize the algorithm. - Forgy Initialization: Randomly select 𝑘 samples from the data.

- K-Means++: Iteratively choosing new centroids that are farthest from the existing

centroids.

How to tell the right number of clusters?

We find the elbow point of the $J_e$ image.

EM Algorithm for Gaussian Mixture Model (GMM)

Multivariate Gaussian Distribution

$d$-dimensional Multivariate Gaussian:

- $\mu \in \mathbb{R}^d$ the mean vector

- $\Sigma \in \mathbb{R}^{d \times d}$ the covariance matrix

MLE of Gaussian Distribution

The likelihood function of a given dataset $X = \{x_1, x_2, \ldots, x_N\}$:

The maximum likelihood estimation (MLE) of the parameters is defined by:

The optimization problem of maximum likelihood estimation (MLE):

Solve the optimization by taking the gradient:

Gaussian Mixture Model (GMM)

A Gaussian Mixture Model (GMM) is the weighted sum of a family of Gaussians whose density function has the form:

- Each Gaussian $N(\mu_k, \Sigma_k)$ is called a component of GMM.

- Scalars $\{\pi_k\}_{k=1}^{K}$ are referred to as mixing coefficients, which satisfy

This condition ensures $p(x \mid \pi, \mu, \Sigma)$ is indeed a density function.

Soft Clustering with Mixture Model

By Bayes Rule, the posterior probability of $z$ given $x$ is:

We call $\gamma_k$ the responsibility of the $k$-th component on the data $x$.

Probabilistic Clustering: each data point is assigned a probability distribution over the clusters.

“$x$ belongs to the $k$-th cluster with probability $\gamma_k$”

MLE for Gaussian Mixture Model

Log-likelihood function of GMM

Maximum Likelihood Estimation

subject to:

Optimality Condition for $\mu$

Take partial derivative with respect to $\mu_k$,

Notice that the posterior of $z_n$ (also known as responsibility $\gamma_{n,k}$) can be written as

Thus

Optimality Condition for $\Sigma$

Similarly, take derivative with respect to $\Sigma_k$, which yields

Responsibility-reweighted Sample Covariance

Optimality Condition for $\pi$

Constraints of mixing coefficients $\pi$: $\sum_{k=1}^{K} \pi_k = 1$

Introduce Lagrange multiplier:

Take derivative with respect to $\pi_k$, which gives

By the constraints, we have $1 = \sum_{k=1}^{K} \pi_k = \frac{-1}{\lambda} \sum_{k=1}^{K} N_k$,

Also notice that

Therefore,

Expectation-Maximization (EM) Algorithm

- Initialize $\pi_k, \mu_k, \Sigma_k, \quad k = 1, 2, \ldots, K$

- E-Step: Evaluate the responsibilities using the current parameter values

- M-Step: Re-estimate the parameters using the current responsibilities

where $N_k = \sum_{n=1}^{N} \gamma_{n,k}$

- Return to step 2 if the convergence criterion is not satisfied.

Hierarchical Clustering

Distance Function: The distance function affects which pairs of clusters are merged/split and in what order.

- Single Linkage:

- Complete Linkage:

- Average Linkage:

Two Types of Hierarchical Clustering

Bottom-Up (Agglomerative)

- Start with each item in its own cluster, find the best pair to merge into a new cluster.

- Repeat until all clusters are fused together.

Top-Down (Divisive)

- Start with one all-inclusive cluster, consider every possible way to divide the cluster in two.

- Choose the best division and recursively operate on both sides.

Agglomerative (Bottom-up) Clustering

- Input: cluster distance measure $d$, dataset $X = \{x_n\}_{n=1}^{N}$, number of clusters $k$

- Initialize $\mathcal{C} = \{C_i = \{x_n\} \mid x_n \in X\}$ // Each point in separate cluster

- Repeat:

- Find the closest pair of clusters $C_i, C_j \in \mathcal{C}$ based on distance metric $d$

- $C_{ij} = C_i \cup C_j$ // Merge the selected clusters

- $\mathcal{C} = (\mathcal{C} \setminus \{C_i, C_j\}) \cup \{C_{ij}\}$ // Update the clustering

- Until $|\mathcal{C}| = k$

A naïve implementation takes space complexity $O(N^2)$, time complexity $O(N^3)$.

LASSO Regression

LASSO (Least Absolute Shrinkage and Selection Operator): Simply linear regression with an $\ell_1$ penalty for sparsity

sparse solution $\leftrightarrow$ feature selection

Principal Component Analysis (PCA)

Computing PCA: Eigenvalue Decomposition

Objective: Maximize variance of projected data

subject to $\mathbf{u}_j^T \mathbf{u}_j = 1$, $\mathbf{u}_j^T \mathbf{u}_k = 1$, $k < j$

Observation: PC $j$ is direction of the $j$-th largest eigenvector of $\frac{1}{n} \mathbf{X}^T \mathbf{X}$

Eigenvalue Decomposition:

are eigenvectors of $\frac{1}{n} \mathbf{X}^T \mathbf{X}$

Manifold Learning

Geodesic distance: lines of shortest length between points on a manifold

Classical Neural Networks

Forward propagation

Backpropagation: Gradient Computation

Apply the chain rule to compute gradients.

Summary of backpropagation:

(No $\delta^{(1)}$)

- Based on $\delta^{(l)}$, $\frac{\partial J(\Theta)}{\partial \Theta^{(l)}}$ can be computed as:

For example, the activation function $g(x)$ is sigmoid, i.e., $g(x) = \frac{1}{1+e^{-x}}$ and $g’(x) = g(x)(1 - g(x))$.

For example, $J(\Theta)$ is the cross-entropy loss for binary classification, i.e.,

and

Optimization of Deep Networks

Vanilla Gradient Descent

Core: Compute the gradient of the loss function $g_t = \nabla \mathcal L$ on all training samples

Stochastic Gradient Descent

Core: Select a sample $(\mathbf x_i, y_i)$ from the training set and compute the gradient of the loss function $g_t = \nabla \mathcal L$ on the selected sample.

Mini-batch Gradient Descent

Core Randomly select $b$ samples $\{(\mathbf x_i, y_i)\}_{i \in [1, n]}$ and compute the gradient of the loss function $g_t = \nabla \mathcal L$ on the selected sample.

Gradient Descent with Momentum

where

The momentum term ($\mathbf{v}_t$) accumulates the gradients from the past several steps.

Adaptative Gradient (AdaGrad)

Particularly, it tends to assign higher learning rates to infrequent features, which ensures that the parameter updates rely less on frequency and more on relevance.

In AdaGrad, the parameters are updated as:

where

$\epsilon$ is a small number to ensure numerical stability.

Here, $.*$ is the element-wise product, and $\mathbf{g}_t = \nabla \mathcal{L}(\Theta_t)$.

Root Mean Square Propagation (RMSProp)

RMSProp changes the gradient accumulation in AdaGrad into an exponentially weighted moving average.

This method uses an exponentially decaying average to discard history from the extreme past so that it can converge rapidly after finding a convex bowl, as if it were an instance of the AdaGrad algorithm initialized within that bowl.

- The update rule is denoted as:

where

$r$ is the moving average of squared gradients, $\beta$ is the decay rate.

Adaptive Moment Estimation (Adam)

Adam extends the RMSProp method by making use of first moments of gradients, instead of second moment only in RMSProp.

- Adam can be seen as a variant of combination of RMSProp and Momentum with a few distinctions:

First-order Moment:

Second-order Moment:

Considering the first-order moment $s_t = \beta_1 s_{t-1} + (1 - \beta_1) g_t$, we start by initializing $s_0 = 0$, then:

Note that we initialized $s_0 = 0$, this causes significant amount of bias initially towards smaller values. We can use the fact that $\sum_{i=0}^{t-1} \beta_1^i = \frac{1 - \beta_1^t}{1 - \beta_1}$ to re-normalize the terms, and get:

The same method can be performed in the second-order moments, we get:

Finally, Adam combines the bias-corrected first and second-order moments and updates the parameters as:

Convolutional Neural Networks

Convolution Layer

The convolution operator preserves the spatial structure of image. Different filters extract different features from the original image.

Pooling Layer

The pooling layer is a downsampling operation, typically applied after a convolution layer, which does some spatial invariance.

Commonly used pooling operations: Max Pooling and Average Pooling

Batch Normalization Layer

Batch Normalization alleviate the problem of gradient vanishing.

Fully Connected Layer (FC) or Dense Layer

The fully connected layer operates on a flattened input where each input is

connected to all neurons.

If present, FC layers are usually found towards the end of CNN architectures and

can be used to optimize objectives such as class scores

Word Embedding

Build a dense vector for each word, chosen so that it is similar to vectors of

words that appear in similar contexts, measuring similarity as the vector dot

product, such a representation is called work embedding or word vector.

Word2Vec

Idea: We have a large corpus (“body”) of text: a long list of words. Every word in a fixed vocabulary is represented by a vector. Go through each position t in the text, which has a center word $c$ and context (“outside”) words $o$. Use the similarity of the word vectors for $c$ and $o$ to calculate the probability of $o$ given $c$. Keep adjusting the word vectors to maximize this probability.

Word2vec: Objective Function

- For each position $t = 1, \ldots, T$, predict context words within a window of fixed size $m$, given center word $w_t$.

- In our case, each word is represented as a parameter vector $\theta_i$.

- $\theta = [\theta_1, \ldots, \theta_V]$ represents all the parameters of $V$-many words.

- The objective function $J(\theta)$ is the (average) negative log likelihood:

Word2vec: Prediction Function

- How to calculate $P(w_{t+j} | w_t; \theta)$?

- Softmax function

- We will use two vectors per word $w$

- $v_w$ when $w$ is a center word; $u_w$ when $w$ is a context word.

- $V$: the set of all possible words

- Dot product compares similarity of $o$ and $c$.

- Exponentiation makes anything positive.

- Normalize over entire vocabulary to give probability distribution.

Language Modeling

A language model is a probability distribution over sequences of words, e.g., predicting what word comes next. A system that does this is called a Language Model.

Recurrent Neural Network(RNN)

Need a neural network that can process any length input? Apply the same weights repeatedly.

Training RNN

Get a big corpus of text which is sequences of words

Sample a (batch of) sequence of length ( T ) into RNN-LM; compute output distribution ( \hat{y}^{(t)} ) for every step ( t ), i.e., predict probability distribution of every word, given words so far.

Average this to get overall loss for a sentence (actually, a batch of sentences):

Backpropagation for RNNs: backpropagation through time

Apply the multivariable chain rule:

The gradient w.r.t. a repeated weight is the sum of the gradient w.r.t. each time it appears.

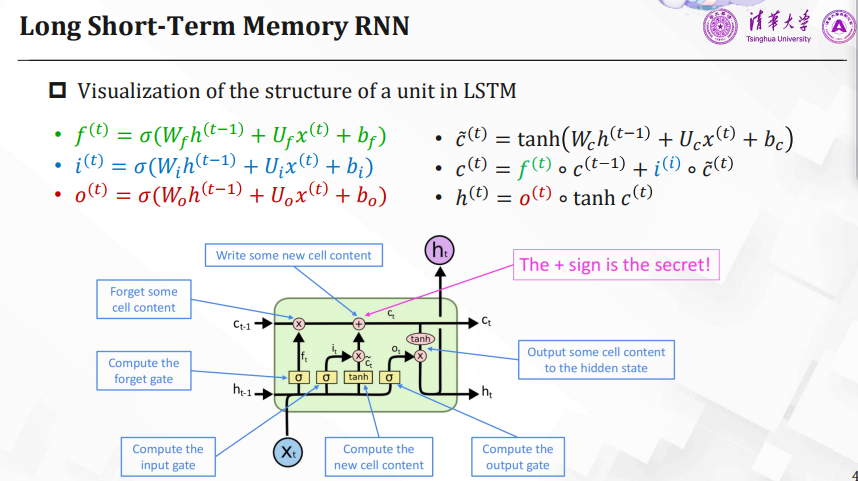

Long Short-Term Memory RNN

The key to LSTMs is the cell state, the hidden state stores short-term information; the cell stores long-term information.

The cell runs straight down the entire chain, with only some minor linear interactions. It’s very easy for information to just flow along it unchanged.

LSTM – Forget Gate

Gating mechanisms: Control which information is erased/written/read from the cell

- On each timestep, each element of the gates can be open (1), closed (0), or somewhere in-between (Sigmoid function).

- The gates are dynamic; their value is computed based on the current context.

Forget gate:

Controls what is forgotten from the previous cell state

LSTM – Input Gate

New cell content:

The content that will be added to the cell state

Input gate:

Controls what parts of the new cell content are written to the cell

LSTM – Update Cell State

Cell state: Forget some content from the last cell state; input some new content to the cell state

LSTM – Output Gate

Output gate: Controls what parts of cell content are output to hidden state

Hidden state: Output some content from the cell as hidden state

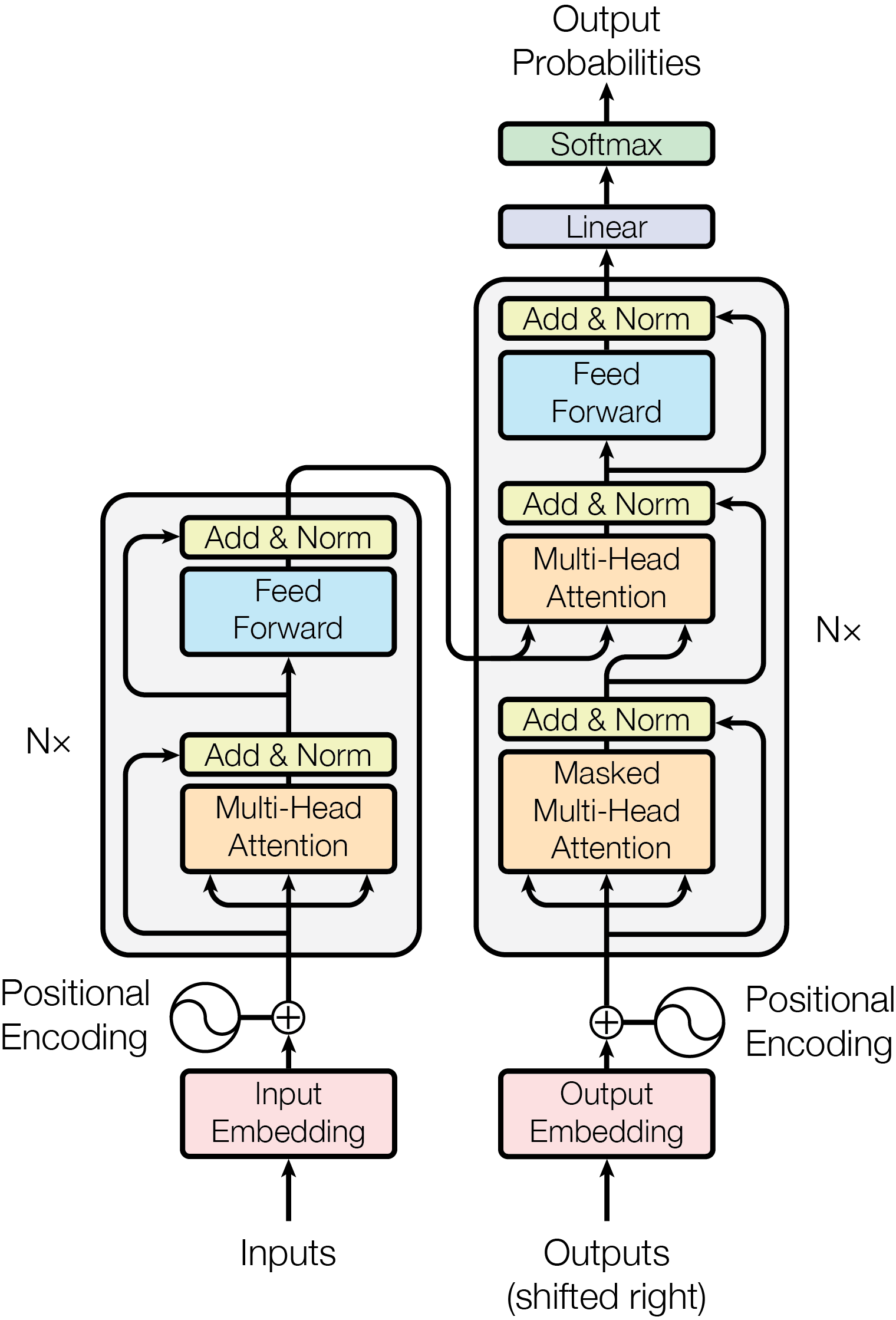

Transformers

Attention

- Attention: directly model relationships between any two positions in the input sequence, regardless of their distance.

- Let the sequence be $w_{1:n}$. For each word $w_i$, let $x_i$ be its word embedding.

- Each word vector (embedding) is transformed into three vectors query, key, value.

Matrices (Q, K, V) are learnable parameters.

Transformer Encoder

Position encoding

Since self-attention doesn’t build the order information, we need to encode the order of the sentence in word embeddings.

Consider representing each sequence index as $p_i \in \mathbb{R}^d$

$x_i$ is word embedding, $\tilde{x}_i$ is positioned word embedding. $p_i$ can be a sinusoidal function or learnable parameters.

Multi-head attention

Define multiple attention “heads” through multiple $Q$, $K$, $V$ matrices.

Each attention head performs attention independently.

Then the outputs of all the heads are combined.

Residual connection

A trick from ResNet to help models train better.

Layer normalization

A trick to stabilize the training.

$\mu, \sigma$ is the mean and standard deviation of $x \in \mathbb{R}^d$

Feed-forward network

Self-attention is just the linear combination of values.

Feed-forward network introduces nonlinearity.

Transformer Decoder

Masked attention: Mask out attention to future words by setting attention scores to $-\infty$

For any current word $i$ and future word $j$ ($i < j$), we have the attention weight

Cross-attention: The queries are drawn from the decoder, the keys and values are drawn from the encoder. Establish a connection between the source (input) and target (output) sequences.

Variational Autoencoder(VAE)

Let’s turn the autoencoder into a probabilistic model.

The encoder encodes the input data into a distribution of the latent space instead of a single point in latent space.

The decoder maps any latent code to a meaningful data distribution

VAE: Generative Process

WE assume each data point is generated by the following two steps:

- Sample latent variable $z$ from its prior distribution $p(z)$

- Generate $x$ by the conditional model $p_{\theta}(x \mid z)$

The prior distribution $p(z)$ is usually simple, say $p(z) \sim N(0, I)$

The likelihood $p_{\theta}(x)$ is intractable. However, with the help of an encoder $q_{\phi}(z | x)$, we can obtain a tractable lower bound of the likelihood.

The model can be trained by maximizing this lower bound.

The Evidence Lower Bound (ELBO)

The formula for the Kullback-Leibler (KL) divergence:

The KL divergence from distribution $Q$ to distribution $P$ is defined as:

For discrete probability distributions, it is defined as:

In the context of variational autoencoders, where $q_{\phi}(z|x)$ is the approximate posterior and $p_{\theta}(z|x)$ is the true posterior, the KL divergence is given by:

Notice that

The gap between log-likelihood and ELBO = distance between true posterior and $q_{\phi}$

Encoder and decoder are jointly trained to maximize the evidence lower bound.

This type of DGM is called Variational Autoencoder (VAE).

Further Analysis for ELBO

ELBO is tractable and differentiable.

Reconstruction Loss:

The reconstruction error of sending $x$ through the encoder and decoder.

Prior Regularization:

Make approximate posterior close to prior.

Prior Regularization

Make approximate posterior distribution closer to prior.

- It prevents the “overfitting” of the autoencoder.

Suppose a standard Gaussian prior and a Gaussian decoder:

The KL-divergence between Gaussians has closed-form solution:

Reconstruction Loss

Suppose the encoder and decoder are both Gaussian

(Decoder has fixed variance for simplicity)

The likelihood function:

The reconstruction loss can be approximated via Monte Carlo methods:

where $C_1 = \frac{1}{2 \sigma^2 K}$

$K$ is the number of MC samples.

VAE: Putting together

Training

- Forward Encoder, compute regularization term

- Sample $z \sim q_{\phi}(z | x)$ with reparameterization trick.

- Forward decoder, compute reconstruction term

- Maximize ELBO with gradient ascent

Sampling

- Discard the encoder

- Sample latent from prior

- Forward decoder

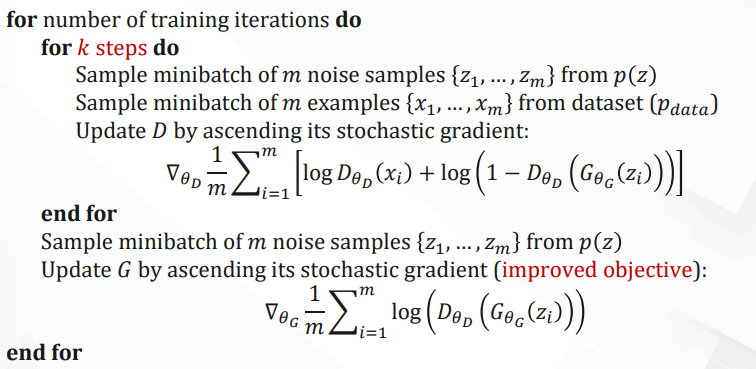

Generative Adversarial Network

A generative adversarial network (GAN) consists of:

- A discriminator $D(x)$

- A generator $G(z)$

$D$ is a binary classifier that tries to discriminate between a sample from the data distribution and a sample from the generator $G$.

$G$ tries to “trick” $D$ by generating samples that are hard for $D$ to distinguish from data.

Min-max objective:

For a fixed generator $G$, the optimal discriminator $D^*$ is

Now consider the min-max objective:

Where $\text{JSD}$ is Jensen-Shannon Divergence:

Thus we have the Unique global minimum

For training we use Gradient ascent on generator, with objective

Diffusion Probabilistic Models

Forward Diffusion Process

Given a data point sampled from real distribution $x_0 \sim q(x_0)$, define a forward diffusion process in which we add small amount of Gaussian noise to the sample in $T$ steps, producing noisy samples $x_1, \ldots, x_T$. The step sizes are controlled by $\beta_t$.

Let $\alpha_t = 1 - \beta_t, \bar{\alpha}_t = \prod_{i=1}^{t} \alpha_t$. Then we have

Backward Diffusion Process

Core idea: Learn to map noise to data by reversing the time.

To reverse the diffusion process, we need to estimate the reverse conditional probabilities $q(x_{t-1} | x_t)$. Note that if $\beta_t$ is small enough, $q(x_{t-1} | x_t)$ will also be Gaussian. Learn a model $p_{\theta}(x_{t-1} | x_t)$ to approximate the reverse process $q(x_{t-1} | x_t)$.

DPM: Putting Together

Training Algorithm

repeat

- $x_0 \sim q(x_0)$

- $t \sim \text{Uniform}(\{1, \ldots, T\})$

- $\epsilon \sim \mathcal{N}(0, I)$

- Take gradient descent step on

until converged

Sampling Algorithm

$x_T \sim \mathcal{N}(0, I)$

for $t = T, \ldots, 1$ do

- $z \sim \mathcal{N}(0, I)$ if $t > 1$ else $z = 0$

end for

return $x_0$

Contrastive Representation Learning

We want a feature extractor $f$ and a score function $S$, such that

Here, $x$ is the reference sample, $x^+$ is positive sample and $x^-$ is negative sample.

Given a chosen score function $S(\cdot)$, we aim to learn an encoder function $f$ that yields high score for positive pairs $(x, x^+)$ and low scores for negative pairs $(x, x^-)$.

Commonly known as InfoNCE loss

A lower bound on the mutual information between $f(x)$ and $f(x^+)$

Therefore, the larger total samples $N$ is, the lower loss $L$ is, $f(x)$ and $f(x^+)$ are more correlated.